We implemented 2 solutions for this project (the other was WordPress, which worked, sort of) b/c OwnCloud wasn’t working out at the time and I didn’t know why, so I fell back to WordPress. OwnCloud was having problems starting on Swarm. Both solutions had issues that required familiarity with WordPress’s PHP and OwnCloud’s Bash startup scripts, respectively, to investigate. But I didn’t realize at the time that OwnCloud’s problems were related to its Bash startup scripts, so I went with WordPress, since I was slightly familiar with its PHP configuration. Here, I finish what we started.

The Problem

The problem… More or less…

Excluding the Proxmox HA part of project…

(if I had the time to debug OwnCloud, on Docker Swarm):

Run any “containerized” web application of your choice, such as a collaboration solution (think OwnCloud, Mattermost, Zimbra).

Containers can be deployed using [Swarm] or [Kubernetes].

Containers must have distributed storage volumes (e.g. a file uploaded to OwnCloud is available on ALL replicas in the cluster).

The following must be demonstrated to receive marks:

Show that there are 3 VM nodes running your containerized solution.

Demonstrate the uploading of a file to your application (e.g. WordPress/OwnCloud) and that the file is accessible by all node IPs.

Submissions:

1.) Report (25 Marks)

Quality and feasibility of the report. Will management accept your conclusions?

2.) Technical Design (25 Marks)

Is the design well thought out or does it look like a “fouled computer run?”

3.) Implementation (25 Marks)

How well did you execute what you described in your report and design?

4.) Presentation (25 Marks)

Be sure to prove that the solution works by accessing it and verifying that HA is working (if you do not present live then you must record a video showing HA works).

All 4 deliverables must be uploaded as pdf to the dropbox for this project.

Solution #1: OwnCloud on a cluster

For Some Things, the Internet Sucks

There is a lot of documentation on the Internet on how to deploy OwnCloud in a Docker container.

There is not much on how to deploy it on a cluster, like Docker Swarm.

Probably b/c neither was explicitly designed to be implemented on a cluster, and as I learned, some design decisions in even a simple application can hinder horizontal scaling.

Today we will go over the OwnCloud deployment on a Docker Swarm cluster, b/c if you didn’t read the instructions, it was going to be a pain to deploy the fileshare over NFS. I didn’t read the instructions. I do what I do every morning I wake up, and feel my way around like a blind man.

Containers are a form of black magic. I will not speculate on how they work, b/c though my speculation on how things likely work is a great mnemonic, speculation attracts trolls like you wouldn’t believe. Better than gold, even. So, if you want to know what a container is, I refer you to the official explanation, until I can figure out a way to speculate without attracting trolls.

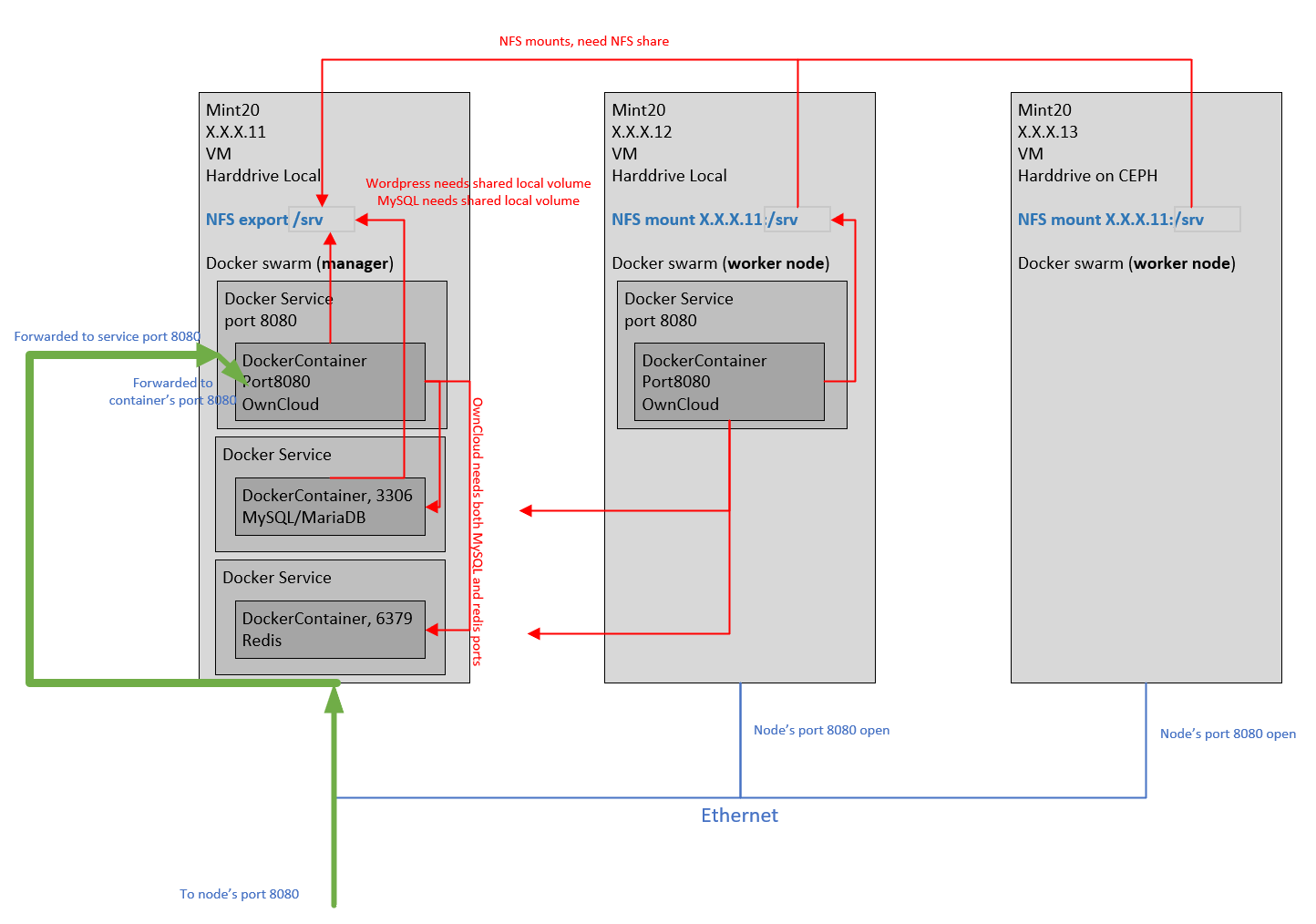

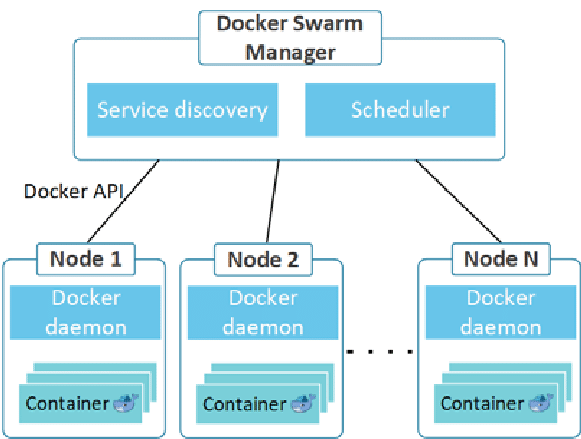

Docker Swarm is Docker containers, but clustered with other worker nodes (other Linux machines with Docker). The diagram was ripped off the internet, and shows what Docker Swarm architecture looks like.

Just want to try OwnCloud in docker?

This isn’t the easiest deployment. These instructions are meant to deploy something existing in a cluster of 2 or more machines.

If you just want to try OwnCloud, go here and run these instructions and it will be working in 10 min.

First you need a Docker Swarm cluster, before installing OwnCloud. Have a machine or VM ready. You actually need 2 or more to have a real cluster, but we can start with one, just to get started. Install your favorite version of Linux on it (the commands below use apt, so they assume a Debian or Ubuntu flavor). Trust me, give it a static IP address, but make sure it still has internet access. Now install:

- git, so you can clone my shell scripts

- docker, so you can run containers

- nfs, so the containers have somewhere to save data to

You can install all 3 by executing the command below.

sudo apt install git docker-compose nfs-kernel-server

The necessary software platforms and tools are now installed.

Create NFS share for containers to use

What is NFS?

NFS is the protocol that many Unix based systems use to share files over a network. Windows has an equivalent under the marketing name of “Windows File and Print Sharing”, whose protocol is also known as SMB. Both have the advantage of making remote files appear as if they are located on the local filesystem.

We will configure one machine as a file sharing server, for the containers, and docker swarm worker nodes. If you only have one machine, you might not even need to do this step, but it won’t hurt either. The NFS shares are generally for the worker nodes, that have containers that expect a local volume.

Create a “/srv” directory, on the VM you installed before with NFS, docker, and git. This is the shared folder, for other computers on the network, aka the Docker Swarm worker nodes.

sudo mkdir -p /srv

Configure the NFS export. Make sure you change the ‘X’ to the first 3 octets of the static IP address you selected for this machine. This means only machines with IP addresses that match the first 3 octets are allowed access to this share. I don’t want to explain the rest of the options b/c I forgot what they mean.

echo '/srv <allowed_ip_mask, ie. X.X.X.0>/24(rw,sync,no_subtree_check)' | sudo tee -a /etc/exports

sudo exportfs -ra
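If you would rather not hand-edit the octets, here is a minimal sketch that derives the export line from the machine’s static IP. The function name `exports_line` and the address 192.168.1.11 are made up for illustration, and it assumes the same /24 subnet as above. The comments also fill in what the three options mean.

```shell
# exports_line IP: print an /etc/exports entry sharing /srv with IP's /24 subnet.
# (hypothetical helper, not part of the repository's scripts)
#   rw               clients may read and write
#   sync             the server replies only after changes are written to disk
#   no_subtree_check turns off subtree checking of exported paths
exports_line() {
    subnet="${1%.*}.0/24"      # 192.168.1.11 -> 192.168.1.0/24
    printf '/srv %s(rw,sync,no_subtree_check)\n' "$subnet"
}

# Usage idea (not run here):
#   exports_line 192.168.1.11 | sudo tee -a /etc/exports && sudo exportfs -ra
```

The function only prints the line, so you can look at it before appending it to “/etc/exports”.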

NFS share, done!

Create a Docker Swarm Cluster

On the same Linux server that we configured, we now create the Docker Swarm Manager.

Remember when I asked you to give your machine a static IP address?

sudo docker swarm init --advertise-addr <machine static IP>

The above command executed on the Linux VM, tells Docker to start a swarm cluster, with this machine as the Swarm Manager.

Congratulations. You have created a brand new Docker Swarm manager.

About your worker nodes, they need to have static addresses, too. And you need to copy the join message that the command above gave you, on the worker nodes. It should look similar to the command below:
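As a rough sketch of its shape: the join command carries a token and the manager’s address on port 2377, the default Swarm management port. The helper name `join_command` and the sample values are hypothetical. The real token comes from your own manager.

```shell
# join_command TOKEN MANAGER_IP: print the command to run on each worker node.
# (hypothetical helper; the token is whatever "docker swarm init" printed)
join_command() {
    printf 'sudo docker swarm join --token %s %s:2377\n' "$1" "$2"
}

# If you lost the original output, the manager can reprint the real command:
#   sudo docker swarm join-token worker
```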

Do you have another VM ready, as the worker node? Have you joined the worker nodes yet, with the command output by the “docker swarm init…” command above? No? It can’t wait. Let’s do it now. The reason is b/c this (my) OwnCloud container configuration expects a local volume that it can create a directory in. And the containers won’t be able to start unless the local volumes are configured on the Swarm worker nodes. So we might as well configure the volumes at the same time that we install the Swarm Worker nodes.

More importantly, we want all the containers to have access to the same local volume, b/c we want all the containers to show and share the same files. This worker node configuration makes the directory on every node show the same files.

Why local volumes?

B/c we want it to work as closely to existing published configurations as possible. Some applications were never designed to access files via network file share. (I suspect MySQL is one of those) And it will crash, if it attempts to access shared files over network.

This isn’t a trivial configuration change, and without a credible published procedure, it takes trial and error to find out whether the application can actually operate under a different configuration.

This solution hacks up an NFS mount, to give access to a remote volume that appears like a local volume to the container. Is this normal? How do I know? You can use an NFS volume in Docker, but who wants to fuss around with that, for a school project?

But yeah, ideally in a cluster, the OwnCloud frontend containers load their shared files over NFS volumes, not local volumes. I’ll leave this for another ambitious engineer to work out.

Configuring the Docker Swarm Worker Nodes

You need another machine or VM ready, installed with the same version of Linux as the manager. It also needs a static IP address. Now install:

- docker, so it can join the cluster

- nfs, so the containers have somewhere to save data to

The command below will install both. After you install Linux on the VM that will be a Swarm Worker node, run on it:

sudo apt install docker-compose nfs-common

Now create the “/srv” directory on (what will be) the Swarm Worker node, and mount the NFS share:

sudo mkdir -p /srv

showmount -e <NFS static IP, ie. X.X.X.11>

echo '<NFS static IP, ie. X.X.X.11>:/srv /srv nfs defaults 0 0' | sudo tee -a /etc/fstab

sudo mount -a

The IP address above, if you followed my instructions, should be the Swarm Manager’s IP address. But you can choose to separate your NFS server from your Swarm Manager. Then the NFS server and Swarm Manager will have different IP addresses, and the above configuration is for the NFS static IP address. And below, when you see the “…swarm join…” statement, it should contain the Swarm Manager’s static IP address. But in my instructions, they are the same.

The NFS share is configured. You should be able to save anything in “/srv” and see it on the manager now, in the same directory.

touch /srv/test
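If the test file does not show up on the manager, it may be that “/srv” on the worker is still a plain local directory. Here is a small sketch for checking that, assuming a Linux worker where “/proc/mounts” lists the active mounts. The function name `is_nfs_mount` is made up for illustration.

```shell
# is_nfs_mount DIR [MOUNTS_FILE]: succeed if DIR is mounted with an NFS filesystem type.
# (hypothetical helper; MOUNTS_FILE defaults to /proc/mounts)
is_nfs_mount() {
    dir="$1"
    mounts="${2:-/proc/mounts}"
    # Fields per line: device mountpoint fstype options dump pass
    awk -v d="$dir" '$2 == d && $3 ~ /^nfs/ { found = 1 } END { exit !found }' "$mounts"
}

# Usage idea (not run here):
#   is_nfs_mount /srv && echo "/srv is on NFS" || echo "/srv is NOT on NFS"
```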

Now you have to run that join command copied when you created the swarm manager.

Now, after the Docker Swarm configuration is created, we can return to installing OwnCloud containers.

Installing OwnCloud on Docker Swarm Cluster

Return to that first machine, the Docker Swarm manager. The one where we changed “/etc/exports”. That machine is both Swarm Manager and NFS server. But the NFS configuration is done, and now we focus only on configuring the containers. And that has to be done on the Swarm Manager. We will now install OwnCloud containers on the Swarm Manager.

OwnCloud is an open-source file sharing website that is similar in functionality to Google Drive, Dropbox, and Amazon S3, without the value-adds of interoperability with editing-in-web software and collaboration with other systems.

This is how they say OwnCloud is architected:

A “docker-compose.yml” is a configuration file for a stack of containers and the software to be installed in them. I got my “docker-compose.yml” from this gentleman’s blog, if you want to read it. They are excellent instructions for a single docker container running on a single VM.

https://doc.owncloud.com/server/next/admin_manual/installation/docker/

Though, if you want it deployed on a Swarm Cluster, you will need to know the “docker stack deploy…” command documented in link below, to create the service, which can move between the cluster nodes, and create the containers on the node.

https://hub.docker.com/_/owncloud

From my examination of the “docker-compose.yml” configuration, I think my diagram below might be more specific.

This can all be done in a shell script. Let’s download it from my github repository.

git clone https://github.com/studio-1b/OwnCloud-on-DockerSwarm.git

This downloads scripts that make it easier to configure the containers in the right order. You should have these files, in an “OwnCloud-on-DockerSwarm” directory:

docker-compose.yml

killdocker.sh

…

What does it do?

The “docker-compose.yml” file is copied from the site documented above, which gave the instructions on how to create services from any “docker-compose” file.

What is a chown flag?

This is an OwnCloud specific configuration.

On a local volume, OwnCloud startup tries to execute the unix command “chown” to give itself ownership of the directories that contain the shared files. But “chown” cannot be done on shared files over NFS. It will cause an error. So the “docker-compose.yml” I downloaded initially allows it to do this, since it was configured for local volume access. It allows the first instance to configure everything the way it believes it should. But then “install.sh” creates a new “docker-compose-disable-chown.yml” that sets the flag for OwnCloud not to run chown, for subsequent containers. Since the first container created the folders and permissions correctly, the subsequent containers don’t need to. And it uses this “docker-compose-disable-chown.yml” to update the same service and containers, so they do not run chown. This allows the OwnCloud containers to run on the worker nodes, accessing files over NFS.

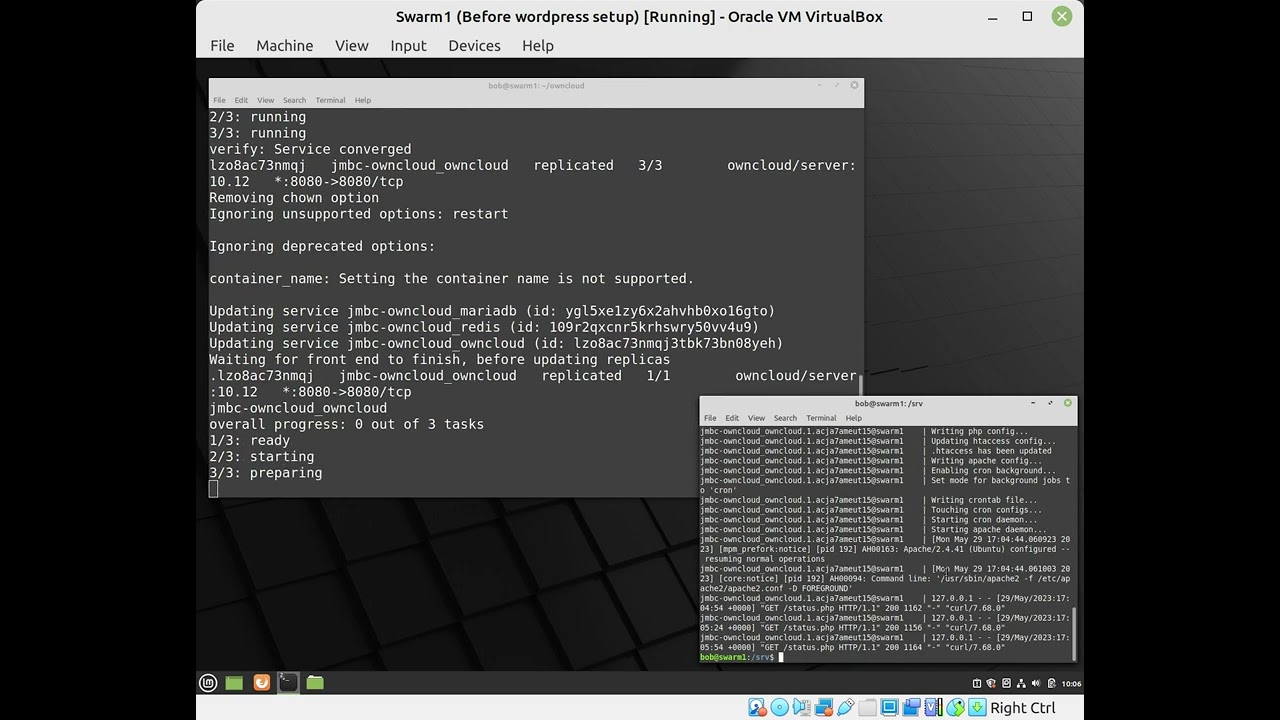

But “install.sh” does the fine tuning, and is the file you will run. It executes the steps required to install OwnCloud on the Docker Swarm cluster. It pulls the Docker images for OwnCloud. It then creates the directories that the volumes expect, and gives the containers permission to create subdirectories. It updates the environment variables so OwnCloud accepts requests addressed to 3 IPs (explained below in the aside on trusted_domains). It waits until the services are up, then it updates the chown flag in the OwnCloud configuration, updates the service, and increases the number of frontend container replicas to 3 (b/c my script expects 3 worker nodes and I think Swarm will try to distribute them across all the nodes).
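To make that sequence concrete, here is a rough sketch of the deploy, wait, re-deploy, and scale steps. It is not the actual “install.sh”. The stack name `jmbc-owncloud` is inferred from the service name used later in this post, the frontend service name `owncloud` is an assumption, and the directory creation and environment variable steps are left out b/c their details live in the real script.

```shell
STACK="jmbc-owncloud"          # inferred from the service name jmbc-owncloud_mariadb
FRONTEND="${STACK}_owncloud"   # assumption: the frontend service is named "owncloud"

# Block until every service in the stack reports all replicas running (e.g. "1/1").
wait_for_stack() {
    while docker stack services "$STACK" --format '{{.Replicas}}' |
          awk -F'[/ ]' '$1 != $2 { pending = 1 } END { exit !pending }'; do
        sleep 5
    done
}

# Sketch of the order of operations described above (not the author's script).
install_sketch() {
    # First pass: the single frontend container is allowed to run chown.
    docker stack deploy -c docker-compose.yml "$STACK"
    wait_for_stack
    # Second pass: update the same services with the chown flag disabled.
    docker stack deploy -c docker-compose-disable-chown.yml "$STACK"
    wait_for_stack
    # Finally, spread 3 frontend replicas across the cluster.
    docker service scale "${FRONTEND}=3"
}
```

Deploying a second compose file under the same stack name updates the existing services instead of creating new ones, which is what lets the chown flag change after the first start.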

The “killdocker.sh” simply uninstalls the OwnCloud containers from the Docker Swarm.

So run the command below now, so it can install OwnCloud. As of 5/27/2023, this script should work w/ the OwnCloud Docker Image pulled from the internet.

./install.sh

The installation process should look like:

After the script finishes, the commands below will first show what manager/worker nodes there are, and then what services are running on a node. Add the node name at the end of the second command to show the services running on that node.

docker node ls

docker node ps <node name>
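If you don’t want to type each node name, a small loop can walk all of them. This is a sketch, run on the Swarm manager. The function name `show_all_nodes` is made up for illustration.

```shell
# show_all_nodes: list the tasks running on every node in the swarm.
# (hypothetical helper; must be run on a manager node)
show_all_nodes() {
    for node in $(docker node ls --format '{{.Hostname}}'); do
        echo "== $node =="
        docker node ps "$node"
    done
}

# Usage idea (not run here):
#   show_all_nodes
```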

You can see where the containers are distributed. The MySQL container seems stuck on the Swarm manager, until I can figure out why it is unable to access the files on NFS shares. If you’re interested, you can see the error in the log by entering the command below. You will see that if it tries to start MySQL on a worker node, it crashes trying to access database files. It only succeeds when the files are actually local, which is on the Swarm manager.

docker service logs jmbc-owncloud_mariadb

But you should be able to access the OwnCloud website, via

http://localhost:8080

or

What is a “trusted_domains” configuration?

This, too, is an OwnCloud configuration, which you set in the “docker-compose.yml”. It controls which hostnames OwnCloud will accept requests on. By default, you can open OwnCloud by typing in “http://localhost” but not “http://<static_address>”. This is b/c OwnCloud has implemented a sort of protection for multi-homed websites.

Multi-homed web servers are web servers that serve several domains, even though the machine has fewer IP addresses. Normally a simple web server serves HTML pages for 1 website, for 1 DNS address, mapped to 1 IP address.

A multi-homed website might have as few as 1 IP address, with several DNS addresses assigned to it. And each DNS address has a separate and different website. http://multihomedsight1 and http://multihomedsight2 might both go to 142.54.13.11, but serve out 2 different websites.

Web servers can do this thru a few mechanisms. The most standard way I’ve learned is thru an HTTP header called “Host”. I’ve also hacked an HTTP request to work by submitting “GET http://multihomedsight2/page24.html”. But whatever you type in a browser address bar, the browser puts the hostname part into an HTTP header called “Host”. That way, a multi-homed web server always knows which website you want, even though it holds multiple sites. The configuration sometimes goes by different names. In Apache, it is called a Virtual Host or vhost. In IIS, I think it is called a Site Binding.
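To see the “Host” header for yourself, here is a minimal sketch that prints the raw request a browser would send. The function name `http_request` is made up, and the hostname and page are the example values from above.

```shell
# http_request HOST PATH: print a raw HTTP/1.1 request for PATH on site HOST.
# (hypothetical helper; it only prints the request, it does not send it)
http_request() {
    printf 'GET %s HTTP/1.1\r\nHost: %s\r\nConnection: close\r\n\r\n' "$2" "$1"
}

# Usage idea (not run here): ask the server at one IP for a specific site.
#   curl -H 'Host: multihomedsight2' http://142.54.13.11/page24.html
```

The IP address picks the machine, and the “Host” line picks the website on that machine.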

So “trusted_domains” in the “docker-compose.yml” file is a list of values of the “Host” HTTP header that the OwnCloud website will accept. And since that is what you type in the web browser, this normally just works. It only becomes a problem when there is a mismatch, and when there is, then instead of you getting someone else’s files, it tells you there is an error.

It is not, as it sounds, a security feature. It isn’t a security feature at all. Or shouldn’t be.

http://<static ip address of any of the nodes>:8080
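Since the project asks you to show the site is reachable on every node IP, here is a small sketch that checks them all in one go. The function name `check_nodes` and the sample addresses are made up, and it assumes OwnCloud’s “status.php” page answers on port 8080 for each address.

```shell
# check_nodes IP...: report whether OwnCloud answers on port 8080 of each node.
# (hypothetical helper; assumes /status.php responds for each address)
check_nodes() {
    for ip in "$@"; do
        if curl -fsS -o /dev/null --max-time 5 "http://$ip:8080/status.php"; then
            echo "$ip ok"
        else
            echo "$ip FAILED"
        fi
    done
}

# Usage idea (not run here):
#   check_nodes 192.168.1.11 192.168.1.12 192.168.1.13
```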

Have fun! You’re done installing OwnCloud on a Docker Swarm cluster

You now have a cluster serving OwnCloud. The initial login for OwnCloud containers, configured in the environment variables in “install.sh”, is:

Password: admin

Trusted Domains

If you get an error about not being on a trusted domain, when you access OwnCloud thru a node IP address “http://<Swarm Node IP>:8080/”, then look at the “trusted_domains.txt” file that was downloaded from Github. View the contents, and see if it lists the IP address above. If it doesn’t, update the file to contain the address, and un-install and re-install OwnCloud from my script.

That file is actually loaded by “install.sh” into an environment variable, which the “docker-compose.yml” file reads and sets in the container. When the container starts, it configures a “…overwrite….php” PHP file to add those as valid “Host” HTTP headers that it will accept. This file overrides the “…config…php” file. Yes, it took a long time to figure out that a simple environment variable would’ve fixed this problem, and I fully expect there to be documentation out there, staring me right in the face, saying that this needed to be updated. But I just needed to take the difficult but familiar way.
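As a sketch of that loading step: assuming “trusted_domains.txt” holds one hostname or IP per line (I am guessing at the format here), the lines can be joined with commas into the `OWNCLOUD_TRUSTED_DOMAINS` variable that the OwnCloud image reads. The function name `trusted_domains_value` is made up, and the real “install.sh” may do this differently.

```shell
# trusted_domains_value FILE: join the non-empty lines of FILE with commas.
# (hypothetical helper; assumes one hostname or IP per line)
trusted_domains_value() {
    grep -v '^[[:space:]]*$' "$1" | paste -sd, -
}

# Usage idea (not run here):
#   export OWNCLOUD_TRUSTED_DOMAINS="$(trusted_domains_value trusted_domains.txt)"
```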

Fast Forward

OwnCloud is actually much easier to install on Docker Swarm than WordPress, though I speculate OwnCloud may not handle concurrent access well on shared files (see video, below). I would choose to install OwnCloud over WordPress if you just wanted to try implementing open-source software on a Docker Swarm cluster. There are fewer initial issues.

It is more technical (and requires programming experience) to explain the issues with horizontally scaling WordPress. But those are explained in an upcoming post.