Most Internet browsers, such as Chrome, Edge, and Firefox, have network profilers that show how fast your application serves files. This can give you insight into how your application works and where its bottlenecks are. However, this article only compares the homebuilt web server I built to serve the CarPoolMashup application against Apache serving the same files, against the application running inside a Docker container, and against that Docker container running in a managed service such as Amazon AWS ECS.

All tests were run over the same coffee shop Wi-Fi Internet connection, a typical connection speed common to all tests.

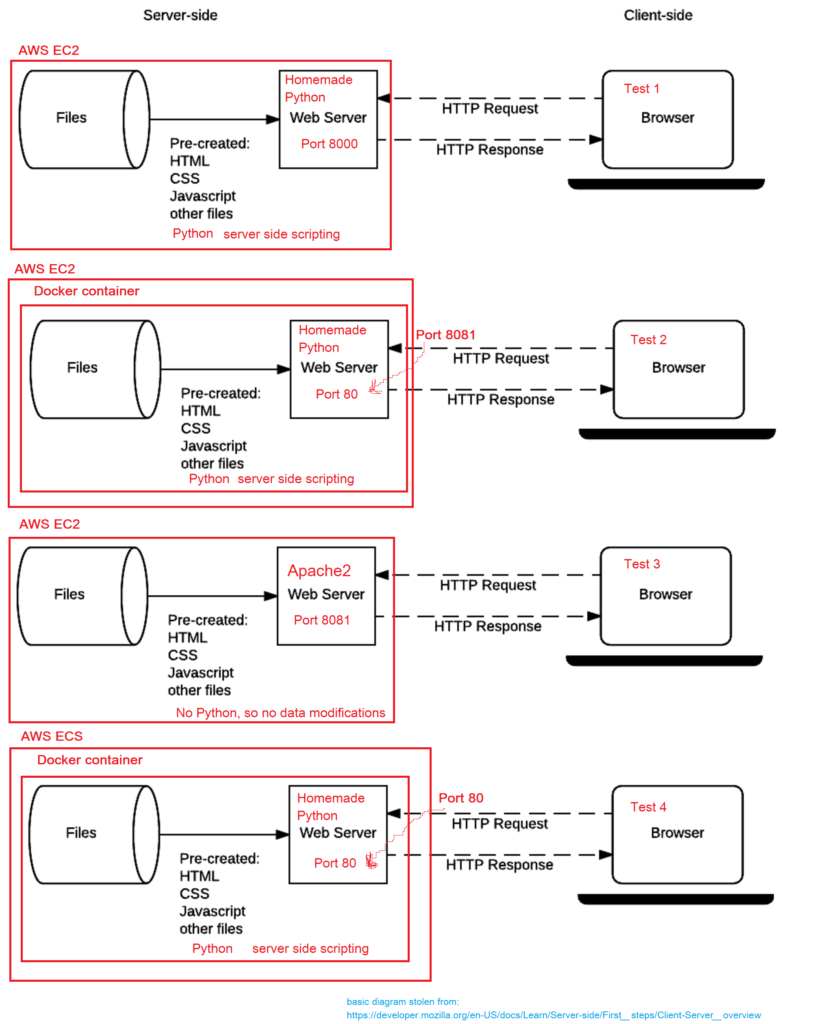

Test Environment, for Web Server

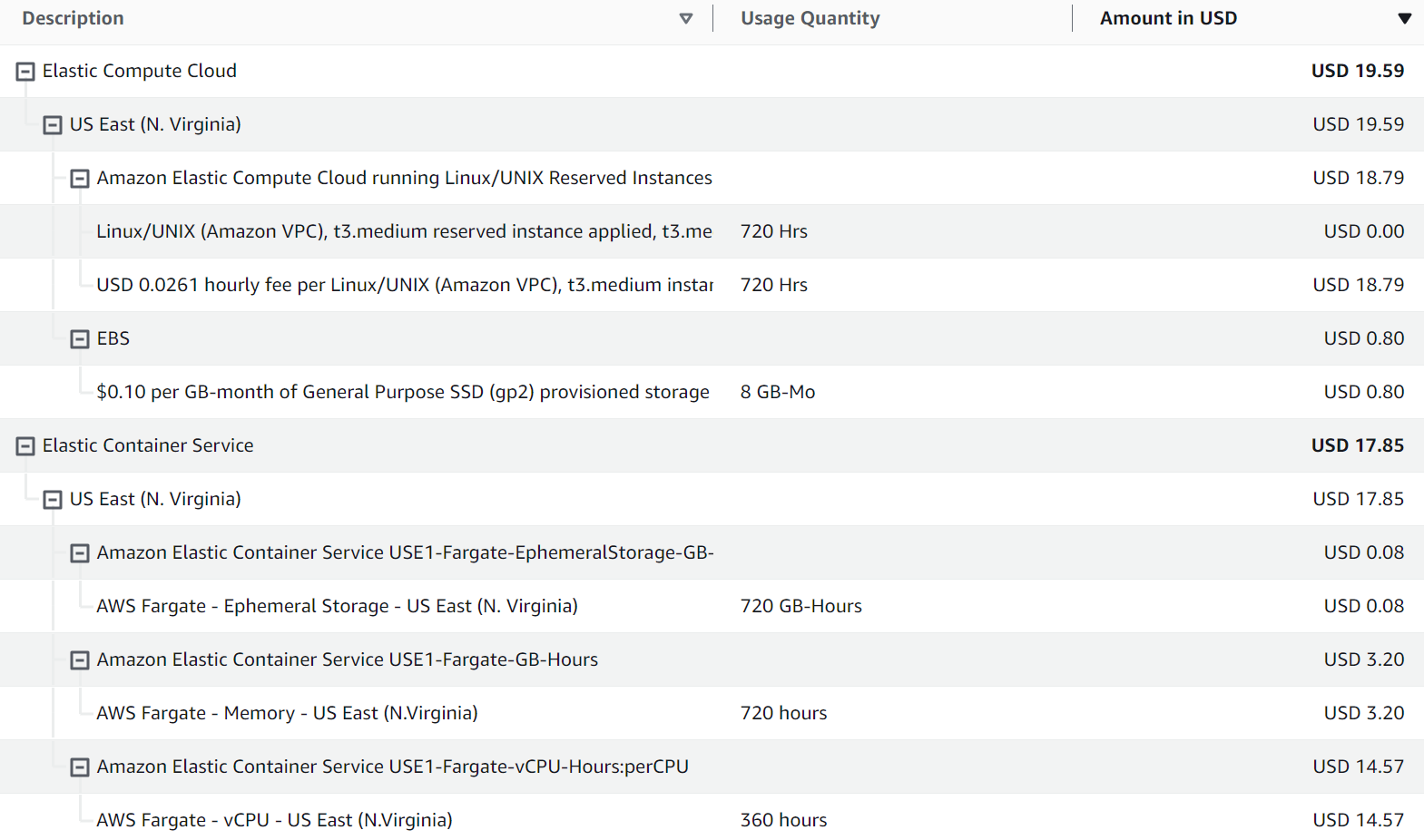

There is no apples-to-apples way to compare computing speed between running the CarPoolMashup application on a VM and running it on a managed cloud service. So we will compare two products of the same cost in the Amazon AWS suite of cloud services: ECS and (paid) EC2.

Amazon AWS Elastic Container Service (ECS) has NO FREE TIER. It is charged hourly and will cost about $24/mo if run continuously for the entire month. AWS's Elastic Compute Cloud (EC2) service provides VMs, and it does have a free tier: a t2.micro gets 750 free hours per month, enough to run it the whole month. But since we want to see whether we get anything extra by running in ECS, we want an EC2 VM of equivalent cost, and a t2.medium on an annual reserved contract costs about the same.

EC2 allows an Elastic IP to be assigned to the instance, which means the application's IP address can be kept the same. ECS doesn't offer this unless you add a load-balancing solution (more cost) to assign the Elastic IP to. Without a load balancer, the container's public IP is only temporarily assigned, and a new IPv4 address is assigned if the managed service is rebooted for maintenance.
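To make the "same cost" comparison concrete, here is a small Python sketch of the arithmetic. It assumes a 30-day (720-hour) month and the roughly $24/mo ECS figure from the text, and it treats the reserved t2.medium as a flat monthly price, which is also an assumption. The function names are made up for illustration.

```python
# Assumption: a 30-day month of 24 * 30 = 720 hours.
HOURS_PER_MONTH = 24 * 30


def hourly_rate_from_monthly(monthly_cost: float,
                             hours: float = HOURS_PER_MONTH) -> float:
    """Hourly rate that adds up to `monthly_cost` when run all month."""
    return monthly_cost / hours


def break_even_hours(hourly_rate: float, flat_monthly_cost: float) -> float:
    """Hours per month at which a pay-per-hour service costs the same as a
    flat monthly price (e.g. a reserved instance). Below this, hourly is cheaper."""
    return flat_monthly_cost / hourly_rate


# Example: ~$24/mo for ECS, compared with a flat ~$24/mo reserved VM
ecs_rate = hourly_rate_from_monthly(24.0)
print(f"ECS ~ ${ecs_rate:.4f}/hour")
print(f"break-even at {break_even_hours(ecs_rate, 24.0):.0f} hours/month")
```

If both options cost about the same per month, the break-even point is the whole month, so the comparison only holds for an application that runs continuously; an hourly service that is stopped part of the time costs less.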

Test Plan, for Web Server

We will run CarPoolMashup in four configurations:

1. As a Python application running from the console on a t2.medium VM, with a fixed IP

2. Served by Apache2 on a t2.medium VM, with a fixed IP

3. Running inside a Python container on a t2.medium VM, with a fixed IP

4. Running inside the same Python container, but in AWS ECS, with a temporary IP address

We want to see whether Python can serve application files to the browser as “fast” as a purpose-built web server.

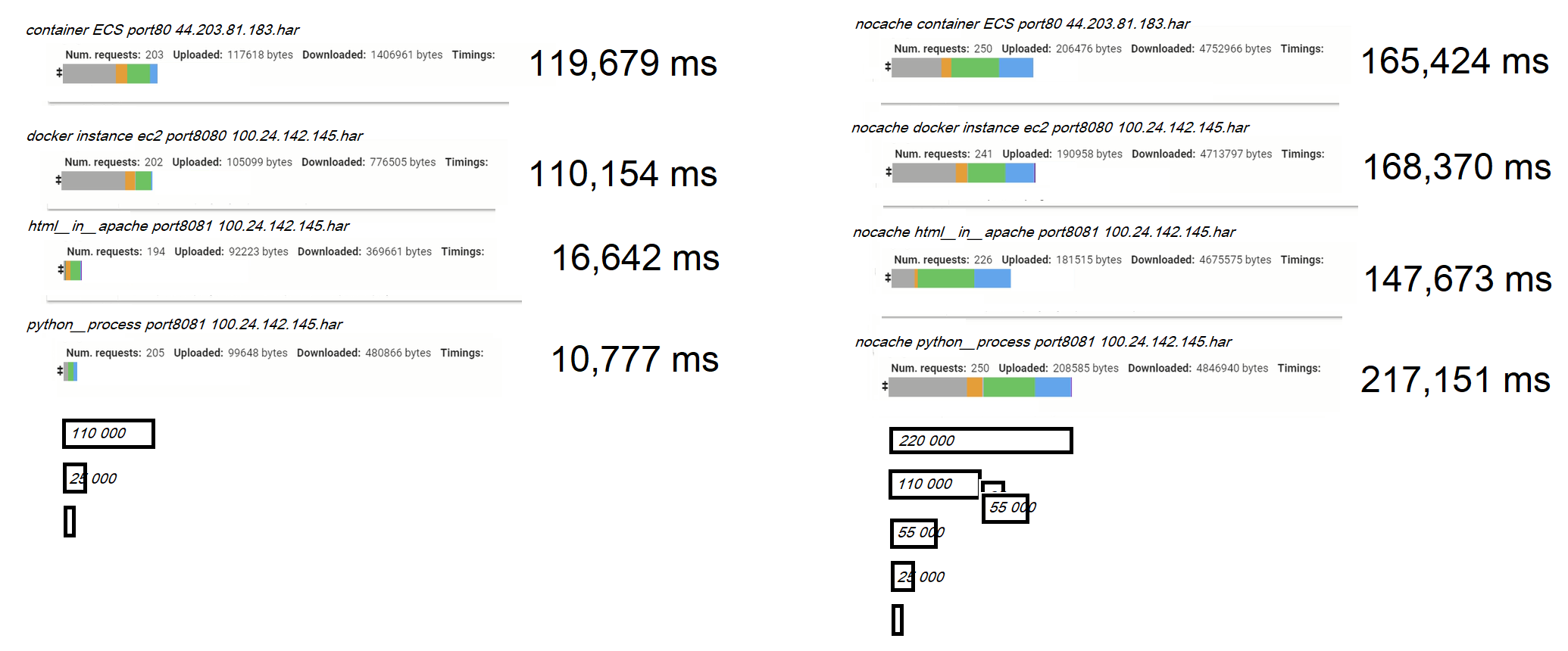

Results of Tests

Below are the results:

Even though there are 4 scenarios listed, you will notice there are 8 results. This is because the browser's network profiler has an option to turn off caching. With caching turned off, you can measure how the web servers perform against each other; these results are in the RIGHT column. Those numbers are higher than the ones in the left column, which was measured with caching turned on. The left column is closer to real-world performance, which is what a user will actually feel.

Caching is a mechanism where the browser doesn't download the same files over and over; it recognizes when a requested file is a copy it has already downloaded and pulls it from its local cache, saving network bandwidth for other, newer files. You will notice a considerable real-world performance increase for all applications with caching on, CarPoolMashup included. Caching works best when the web server is coded to send HTTP headers telling the browser which content can be cached. Apache does this natively, without any configuration changes. Without caching, when the browser requests every file, Apache's individual transfers add up to a total of 140 sec. However, many of these transfers occur in parallel, and with caching some are skipped entirely: in wall-clock terms, Apache finished serving the last file 16 sec after the click with caching, versus 25 sec without. And caching feels a lot faster.
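As a rough illustration of what sending caching headers can look like, here is a minimal sketch using Python's standard `http.server` module. It assumes a server built on `BaseHTTPRequestHandler`, which may not be how the homebuilt CarPoolMashup server is written, and the file table, the `MAX_AGE` value, and the helper names are hypothetical. The server sends `Cache-Control` and an `ETag`; when the browser revalidates with `If-None-Match`, it answers `304 Not Modified` with no body instead of resending the file.

```python
import hashlib
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Optional, Tuple

# Hypothetical in-memory site: path -> (Content-Type, body)
FILES = {
    "/index.html": ("text/html", b"<html><body>CarPoolMashup</body></html>"),
    "/data/rides.json": ("application/json", b'{"rides": []}'),
}
MAX_AGE = "max-age=3600"  # assumption: let the browser reuse files for an hour


def etag_for(body: bytes) -> str:
    """A validator derived from the file contents; ETags are quoted strings."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


class CachingHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        entry = FILES.get(self.path)
        if entry is None:
            self.send_error(404)
            return
        content_type, body = entry
        etag = etag_for(body)
        # Conditional GET: the browser already holds this exact version.
        # (Simplified: only an exact, single-ETag match is recognized.)
        if self.headers.get("If-None-Match") == etag:
            self.send_response(304)
            self.send_header("ETag", etag)
            self.send_header("Cache-Control", MAX_AGE)
            self.end_headers()
            return
        self.send_response(200)
        self.send_header("Content-Type", content_type)
        self.send_header("Content-Length", str(len(body)))
        self.send_header("ETag", etag)
        self.send_header("Cache-Control", MAX_AGE)
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        pass  # silence per-request console output in this sketch


def start_background(port: int = 0) -> ThreadingHTTPServer:
    """Start the server in a daemon thread (port 0 means pick a free port)."""
    server = ThreadingHTTPServer(("127.0.0.1", port), CachingHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch(port: int, path: str, etag: str = "") -> Tuple[int, Optional[str], bytes]:
    """GET `path`, optionally revalidating with If-None-Match like a browser."""
    conn = http.client.HTTPConnection("127.0.0.1", port)
    try:
        headers = {"If-None-Match": etag} if etag else {}
        conn.request("GET", path, headers=headers)
        resp = conn.getresponse()
        return resp.status, resp.getheader("ETag"), resp.read()
    finally:
        conn.close()
```

A first `fetch(port, "/index.html")` returns 200 with the body and an ETag; repeating it with that ETag returns 304 and an empty body, which is the round trip a caching browser makes instead of downloading the file again.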

For reasons I cannot explain, the improvement from caching is smaller when running inside the Docker container or in ECS. Perhaps the console output inside the container has to be sent somewhere, and this write is synchronous across all the threads in the Python application. But if that were the cause, I would initially expect it to be an even bigger problem with caching turned off.
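If the guess about console output is right, one common mitigation is to take the write off the request threads. This is a minimal sketch, assuming the server is built on `http.server.BaseHTTPRequestHandler`, whose default `log_message` writes each request line to `sys.stderr`. The logger name and class name are hypothetical, and whether this changes the container numbers here is untested.

```python
import logging
import logging.handlers
import queue
from http.server import BaseHTTPRequestHandler

ACCESS_LOG = "carpool.access"  # hypothetical logger name

# Request threads only put records on this queue; one background thread
# (the QueueListener) performs the potentially slow console write.
log_queue: "queue.Queue[logging.LogRecord]" = queue.Queue()


def setup_async_logging(stream=None) -> logging.handlers.QueueListener:
    """Route the access log through a queue. stream=None means sys.stderr,
    which is typically where a container runtime collects console output."""
    console = logging.StreamHandler(stream)
    console.setFormatter(logging.Formatter("%(asctime)s %(message)s"))
    listener = logging.handlers.QueueListener(log_queue, console)
    logger = logging.getLogger(ACCESS_LOG)
    logger.setLevel(logging.INFO)
    logger.addHandler(logging.handlers.QueueHandler(log_queue))
    logger.propagate = False  # don't also write synchronously via the root logger
    listener.start()
    return listener


class QueuedLogHandler(BaseHTTPRequestHandler):
    """Overrides the default log_message, which writes to sys.stderr
    directly from each request thread."""

    def log_message(self, format: str, *args) -> None:
        logging.getLogger(ACCESS_LOG).info(
            "%s %s", self.address_string(), format % args)
```

Call `setup_async_logging()` once at startup and `listener.stop()` at shutdown, so any queued lines are flushed before the process exits.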

But the caching numbers are not the main purpose of the tests; they are just good to know, as a sense of how the differences would feel in real-world use.

We are here to examine whether there is any advantage to running the CarPoolMashup web server on any other platform, when it is just serving files. This is best compared in the worst-case scenario of no caching. In this case, there is no advantage at all between running the CarPoolMashup web server in a Docker container on an EC2 t2.medium and having the container run in serverless ECS. Both take 165 sec for all the files to finish transferring.

As expected, there is an advantage to having Apache serve the files for CarPoolMashup. The files were changed so that Apache could serve them without the need for CGI scripting, but I doubt that gained any speed, as most of the JSON requested via XMLHttpRequest was already served as static files. Apache, with many more years of refinement, served all the megabytes of files a little faster through the network to the browser: a total of 150 sec for all files, versus 220 sec for the Python web server. Again, most of these transfers ran in parallel, but we are comparing the two servers on how quickly they “output” all the files.
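Because the transfers overlap, a total that adds up every file's own transfer time can be much larger than the time the user actually waits. A small sketch of the two measurements, using made-up timings rather than data from these tests:

```python
from typing import List, Tuple

# One (start, end) pair per file, in seconds after the click.
Timing = Tuple[float, float]


def summed_durations(timings: List[Timing]) -> float:
    """Add up each file's own transfer time, as a per-file total does."""
    return sum(end - start for start, end in timings)


def wall_clock(timings: List[Timing]) -> float:
    """Time from the first request starting until the last file finishes."""
    return max(end for _, end in timings) - min(start for start, _ in timings)


# Hypothetical example: three 4-second transfers that mostly overlap
example = [(0.0, 4.0), (0.0, 4.0), (1.0, 5.0)]
print(summed_durations(example))  # 12.0
print(wall_clock(example))        # 5.0
```

Both numbers are useful: the summed figure compares how much serving work each server did, while the wall-clock figure is closer to what the user feels.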

I did not repeat the tests, so this was not that “scientific”, but it works as a back-of-the-napkin guide. And as such, there wasn't that much of a difference.

These are the things that differ between tests based on the platform the server runs on. The same Python code can run on different platforms, and changing the hardware or platform can only improve:

- Connect (how fast the SYN/ACK handshake completes)

- Send (how quickly the GET request reaches the server's receive buffer)

- Wait (how quickly the GET is processed: reading the file bytes and buffering them to send)

- Receive (how quickly the buffer is flushed, or the file is read)
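Browser profilers report these phases per request, and the names match the `timings` fields of the HAR format that Chrome can export (blocked, dns, connect, send, wait, receive, ssl). Below is a rough Python sketch that times the same phases for a single plain-HTTP GET. It approximates what the browser measures rather than reproducing Chrome's instrumentation, and `time_phases` is a hypothetical helper. It sends an HTTP/1.0 request so that the server closes the connection when the body is complete.

```python
import socket
import time
from typing import Dict


def time_phases(host: str, port: int, path: str = "/") -> Dict[str, float]:
    """Roughly time DNS, Connect, Send, Wait and Receive for one plain-HTTP GET.
    Values are seconds, plus the number of bytes received."""
    t0 = time.perf_counter()
    family, socktype, proto, _, addr = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM)[0]
    t_dns = time.perf_counter()

    sock = socket.socket(family, socktype, proto)
    try:
        sock.connect(addr)  # returns once the TCP handshake has completed
        t_connect = time.perf_counter()

        request = f"GET {path} HTTP/1.0\r\nHost: {host}\r\n\r\n".encode()
        sock.sendall(request)  # returns once the request is in the send buffer
        t_sent = time.perf_counter()

        first = sock.recv(65536)  # blocks until the first response bytes arrive
        t_first_byte = time.perf_counter()

        received = len(first)
        while first:
            chunk = sock.recv(65536)
            if not chunk:  # HTTP/1.0: the server closes when the body is done
                break
            received += len(chunk)
        t_done = time.perf_counter()
    finally:
        sock.close()

    return {
        "dns": t_dns - t0,
        "connect": t_connect - t_dns,
        "send": t_sent - t_connect,
        "wait": t_first_byte - t_sent,        # roughly time to first byte
        "receive": t_done - t_first_byte,
        "bytes": float(received),
    }
```

For example, `time_phases("127.0.0.1", 8000, "/index.html")` against a locally running server returns a dictionary of seconds per phase plus the byte count, which makes it easy to see whether a slow request is spent waiting on the server or receiving data.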

The HTML/JavaScript application itself might have bottlenecks intrinsic to itself or to the browser it is running in. All the tests were run in Chrome.

- Blocked time should be a browser or HTTP-directive issue, caused by limits on simultaneous connections

- Limited TCP resources

- DNS time depends on the DNS server, which is neither Apache nor Python

- SSL time is relevant only to the Google Maps servers
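Much of the Blocked time comes from the browser's own connection limit: for HTTP/1.1, Chrome opens at most six connections to the same host and queues the rest. This simulation involves no real network traffic (each transfer is a `sleep`) and shows requests being released in waves. `simulate_blocked` is a hypothetical helper, and the numbers it prints are illustrative only.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import List

# Chrome's per-host limit for HTTP/1.1 connections; extra requests queue.
MAX_PER_HOST = 6


def simulate_blocked(n_files: int, transfer_s: float,
                     limit: int = MAX_PER_HOST) -> List[float]:
    """Queue n_files requests at once and return how long each one sat
    'Blocked' before a connection slot freed up. The transfer itself is
    simulated with sleep(); no network traffic is involved."""
    queued_at = time.perf_counter()
    blocked = [0.0] * n_files

    def fetch(i: int) -> None:
        blocked[i] = time.perf_counter() - queued_at  # time spent waiting
        time.sleep(transfer_s)                         # stand-in download

    with ThreadPoolExecutor(max_workers=limit) as pool:
        list(pool.map(fetch, range(n_files)))
    return blocked


waits = sorted(simulate_blocked(n_files=18, transfer_s=0.2))
print([round(w, 1) for w in waits])
```

The sorted waits come in groups of six: roughly zero, then about one transfer time, then about two, which is why many small files can spend more time Blocked than actually transferring.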

Known issues for the homebuilt Python web server:

- it accepts new connections (after the SYN/ACK handshake) serially, one at a time

- but send/wait/receive can be handled in parallel, for as many connections as the web browser requests

- practically no negotiation of HTTP header directives (including caching) has been implemented. (It doesn't have to be for the server to work at a minimal level of functionality, which is good enough for many things, but not for millions of requests and hundreds of simultaneous requests per second.)
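The pattern of serial connection setup with parallel send/wait/receive can be sketched with plain sockets and one thread per connection. The operating system completes the TCP handshake; the server's single loop then takes finished connections off the listen backlog one `accept()` at a time. This is a hypothetical minimal server, not the CarPoolMashup code; Python's `http.server.ThreadingHTTPServer` follows the same accept-then-thread pattern.

```python
import socket
import threading


def read_request_head(conn: socket.socket) -> bytes:
    """Read until the blank line that ends the HTTP request headers."""
    data = b""
    while b"\r\n\r\n" not in data:
        chunk = conn.recv(4096)
        if not chunk:  # client closed early
            break
        data += chunk
    return data


def handle(conn: socket.socket) -> None:
    """Runs in its own thread: the send/wait/receive part of one request."""
    with conn:
        head = read_request_head(conn)
        request_line = head.split(b"\r\n", 1)[0]
        body = b"you asked for: " + request_line
        conn.sendall(b"HTTP/1.0 200 OK\r\n"
                     b"Content-Type: text/plain\r\n"
                     b"Content-Length: " + str(len(body)).encode() +
                     b"\r\n\r\n" + body)


def accept_loop(listener: socket.socket, max_connections: int) -> None:
    """The serial part: one loop accepts connections one at a time and
    hands each to a worker thread, so slow transfers overlap."""
    for _ in range(max_connections):  # bounded only so the sketch can be tested
        conn, _addr = listener.accept()
        threading.Thread(target=handle, args=(conn,), daemon=True).start()
```

A real server would loop forever instead of stopping after `max_connections`; the bound is there so the sketch can be exercised and then exit.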

The Apache web server is more advanced and optimized:

- it may be able to handle nearly simultaneous incoming connections

- reading from the file system may be faster

- files may be cached in memory for repeat read performance

- Apache probably sends correct (or at least optimized) caching directives to the browser about what content is cacheable (everything, in this case)

I hope this gives you some insight into how web applications and web servers are built.

Other results

AWS ECS is inappropriate for running a web application server unless you plan to put in the cost and effort of fronting it with a load balancer, as I've read it prescribed. Containers running in AWS ECS cannot be assigned an Elastic IP, so when the container is moved to another server, its public IP address changes.